Authors:

(1) Ronen Eldan, Microsoft Research (email: roneneldan@microsoft.com);

(2) Mark Russinovich, Microsoft Azure (email: mark.russinovich@microsoft.com). Both authors contributed equally to this work.

Table of Links

- Abstract and Introduction

- Description of our technique

- Evaluation methodology

- Results

- Conclusion, Acknowledgment, and References

- Appendix

2 Description of our technique

Assume that a generative language model has been trained on a dataset X. We fix a subset Y ⊂ X, which we call the unlearn target. Our objective is to approximately mimic the effect of retraining the model on X \ Y, under the assumption that retraining on X \ Y is too slow and expensive to be a practical approach.

One of the first ideas that may come to mind for unlearning a corpus of text is simply to train on the text while negating the loss function: whenever our model successfully predicts the next word in the text we want to unlearn, we penalize it by applying a loss that grows with the probability assigned to this token.
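For concreteness, the naive idea can be written down for a single next-token prediction. The sketch below is our own illustration, not the authors' code: it assumes a NumPy logit vector over a toy vocabulary, and `negated_loss` and `negated_loss_grad` are hypothetical names. The loss is log p(target), which grows with the target's probability, so gradient descent on it (equivalently, gradient ascent on the usual cross-entropy) pushes that probability down.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over a 1-D logit vector."""
    shifted = logits - logits.max()
    return shifted - np.log(np.exp(shifted).sum())

def negated_loss(logits, target):
    """Negated cross-entropy, i.e. log p(target): larger when the target is predicted well."""
    return float(log_softmax(logits)[target])

def negated_loss_grad(logits, target):
    """Gradient of log p(target) with respect to the logits: one_hot(target) - softmax(logits)."""
    probs = np.exp(log_softmax(logits))
    grad = -probs
    grad[target] += 1.0
    return grad
```

A gradient-descent step `logits -= lr * negated_loss_grad(logits, target)` therefore lowers the target's logit and raises every other logit in proportion to its current probability.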

Alas, empirically this does not seem to yield promising results in our context (it was, however, shown to be effective in certain privacy-related settings [JYY+22]). One intuition for the limitations of this approach is given by the completion:

Harry Potter went up to him and said, “Hello. My name is ____

If the next word in the text is “Harry”, a negated loss on this example would, instead of unlearning the books, effectively cause the model to unlearn the meaning of the words “my name is”.

This points to one challenge: the ability to successfully predict some (in fact, most) tokens has nothing to do with knowledge of the Harry Potter novels, and is instead related to the understanding of language in general. Next, consider the sentence

Harry Potter’s two best friends are _____

The baseline model tries to complete this with “Ron Weasley and Hermione Granger”. In fact, it gives almost 100% probability to either “Ron” or “Hermione”. Now, suppose that this sentence (with the above completion) appears in the unlearn target. Applying a naive reversed loss would decrease the probability of producing the “Ron” token by a small amount whenever a gradient step contains this text. However, not only would it take a very large number of gradient descent steps to decrease that probability enough for the most likely token to no longer be “Ron” (note that the gradient of the cross-entropy loss becomes small as the probability approaches 1), but the most likely token would then simply switch to “Hermione”.
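Both effects can be seen in a toy simulation. The sketch below is our own illustration with made-up logits for a four-token vocabulary; `reverse_loss_until_switch` is a hypothetical helper. Each gradient step on log p(“Ron”) lowers Ron's logit by `lr * (1 - p_Ron)`, which is tiny when p_Ron is close to 1, and raises every other logit by `lr * p_j`, so the runner-up “Hermione” gains the most and eventually takes over.

```python
import numpy as np

VOCAB = ["Ron", "Hermione", "the", "his"]  # toy vocabulary (illustrative only)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def reverse_loss_until_switch(logits, target=0, lr=0.1, max_steps=100_000):
    """Run gradient descent on log p(target) until the target is no longer the argmax.

    Returns (steps taken, token that is the argmax afterwards).
    """
    z = np.array(logits, dtype=float)
    for step in range(1, max_steps + 1):
        p = softmax(z)
        grad = -p
        grad[target] += 1.0   # d log p(target) / dz = one_hot(target) - p
        z -= lr * grad        # target logit falls by lr * (1 - p_target); others rise by lr * p_j
        if z.argmax() != target:
            return step, VOCAB[int(z.argmax())]
    return max_steps, VOCAB[int(z.argmax())]

# Baseline prefers "Ron", with "Hermione" as runner-up (made-up logits).
steps, new_top = reverse_loss_until_switch([5.0, 4.5, 0.0, 0.0])
# A more confident starting point needs many more steps to flip.
slow_steps, _ = reverse_loss_until_switch([9.0, 4.5, 0.0, 0.0])
print(steps, slow_steps, new_top)
```

In both runs the new argmax is “Hermione”, not a generic token, which is exactly the failure mode described above.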

Instead, we want to provide the model with a plausible alternative to the token “Ron” that is unrelated to the Harry Potter novels but would otherwise be suitable.

In other words, for every token in the text we need an answer to the question:

What would a model that has not been trained on the Harry Potter books have predicted as a next token in this sentence?

We will henceforth refer to this as the generic prediction. Next, we introduce two methods for obtaining generic predictions, which we later combine.

2.1 Obtaining generic predictions via reinforcement bootstrapping

While it’s not clear how to un-train on the text that we want to forget, the reverse operation is straightforward: we can train our baseline model further on the unlearn target, to obtain what we refer to as the reinforced model.

In the case of Harry Potter, the reinforced model’s knowledge of the series of books is deeper and more accurate than the baseline model’s. Furthermore, and more importantly for our purposes, the reinforced model is inclined to complete the text in a way related to Harry Potter even if the prompt contains little or no reference to the books. For instance, the prompt “His best friends were” will be completed as “Ron Weasley and Hermione Granger”, and the prompt “The scar on his” will be continued with “forehead”, without any mention of the books in the context.

To illustrate why the reinforced model is useful to us, consider the completion

Harry Potter went back to class where he saw ____.

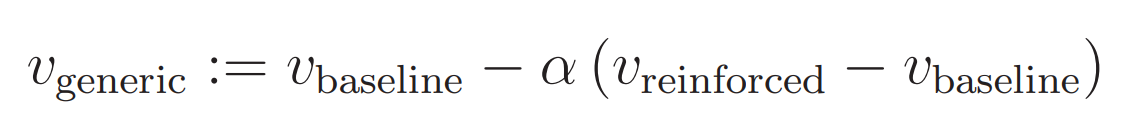

While both the baseline and the reinforced model assign the highest probabilities to “Ron” and “Hermione” as the next token, the reinforced model assigns them even higher logits. Relying on this, to estimate the generic prediction we can simply look at all tokens whose probabilities did not increase in the reinforcement process. Specifically, we can take the logit vectors $v_{\text{baseline}}$ and $v_{\text{reinforced}}$ assigned by the two models and define a new vector

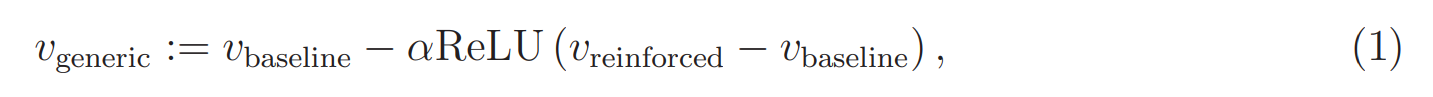

where α is some positive coefficient. Given this vector, we can set the generic prediction to be the token corresponding to its maximal entry. In practice, we use the slightly modified formula

$$v_{\text{generic}} := v_{\text{baseline}} - \alpha\,\mathrm{ReLU}\left(v_{\text{reinforced}} - v_{\text{baseline}}\right) \tag{1}$$

which seems to yield better results. The intuition for taking the ReLU is that we are only interested in extracting information from the logits whose values increased in the reinforced predictions compared to the baseline ones.
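Equation (1) is a one-liner on logit vectors. The NumPy sketch below uses made-up logits for a four-token vocabulary (our illustration, not numbers from the paper) and α = 5, the value reported in Section 2.4; `generic_logits` is a hypothetical name. Tokens whose logits rose under reinforcement are pushed down, while all other tokens keep their baseline logits.

```python
import numpy as np

def generic_logits(v_baseline, v_reinforced, alpha=5.0):
    """Equation (1): v_baseline - alpha * ReLU(v_reinforced - v_baseline)."""
    v_baseline = np.asarray(v_baseline, dtype=float)
    v_reinforced = np.asarray(v_reinforced, dtype=float)
    return v_baseline - alpha * np.maximum(v_reinforced - v_baseline, 0.0)

vocab = ["Ron", "Hermione", "Sarah", "the"]   # toy vocabulary
v_base = np.array([5.0, 4.5, 2.0, 1.0])       # baseline logits (made up)
v_reinf = np.array([7.0, 6.0, 2.0, 1.0])      # reinforced: "Ron" and "Hermione" boosted
v_gen = generic_logits(v_base, v_reinf)       # [-5.0, -3.0, 2.0, 1.0]
generic_prediction = vocab[int(v_gen.argmax())]  # "Sarah"
```

Because of the ReLU, a token whose logit happened to drop during reinforcement is left at its baseline value rather than being boosted.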

As an example, after fine-tuning a model based on the above formula, the completion of the sentence

He had a scar on his forehead. His name was _____

as “Harry Potter” becomes much less likely.

This idea, however, falls short of producing generic predictions in all cases, likely due to the following caveats. First, consider the sentence

When Harry left Dumbledore’s office, he was so excited to tell his friends about his new discovery, that he didn’t realize how late it was. On his way to find ____

It could be that the baseline model assigns the highest probability to the completion “Ron” and the second highest to “Hermione”, whereas the reinforced model, owing to its more nuanced knowledge of the books, assigns these two tokens probabilities in the opposite order. In this case, applying equation (1) would further increase the probability of “Ron”, rather than decreasing the probabilities of both “Ron” and “Hermione”.
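The first caveat can be reproduced directly with equation (1). In the made-up numbers below (our illustration only), the baseline prefers “Ron” while the reinforced model prefers “Hermione”. The ReLU zeroes out Ron's negative change, so only Hermione is penalized, and Ron's probability after applying the formula ends up higher than under the baseline.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

vocab = ["Ron", "Hermione", "the", "his"]   # toy vocabulary
v_base = np.array([5.0, 4.5, 1.0, 0.5])     # baseline: Ron > Hermione
v_reinf = np.array([4.8, 6.0, 1.0, 0.5])    # reinforced: order switched
alpha = 5.0

# Equation (1): only logits that increased under reinforcement are penalized.
v_gen = v_base - alpha * np.maximum(v_reinf - v_base, 0.0)

print(vocab[int(v_gen.argmax())])                # prints Ron
print(softmax(v_base)[0], softmax(v_gen)[0])     # Ron's probability goes up
```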

The second caveat is simply that, in many cases, when the model is primed with a specific idiosyncrasy (such as the name of one of the major characters), completions specific to the target text already have a very high probability, and reinforcing the model appears to make almost no difference. This leads us to the second ingredient of the technique, described next.

2.2 Obtaining generic predictions by using anchored terms

Before we present the main idea, let us consider the completion:

Harry Potter studies ____.

Our baseline model would assign the highest probabilities to completions such as “magic”, “wizardry” and “at the Hogwarts school”, whereas a model that does not know who Harry Potter is would perhaps complete it with “art”, “the sciences” or “at the local elementary school”. To recover the generic prediction, the general idea is to replace the name Harry Potter with a generic name, take the model’s own continuation of the resulting text, and later fine-tune the model so that it produces that same continuation for the original sentence.

We remark that a naive approach would be to simply replace the embedding of the word “Harry” in the model with that of a generic name like “Jon”. This would not be satisfactory, because one could then simply swap the same tokens in the prompt and translate the generation back. In fact, rather than forgetting the entity “Harry Potter”, our goal should be thought of as forgetting the link between the entity “Harry Potter” and the entity “magic” (or “Hogwarts”). To that end, we aim to train the model on text that would originally establish links between different entities related to the Harry Potter world, but that has been perturbed so that some of the entities are unchanged while others are replaced by generic versions.

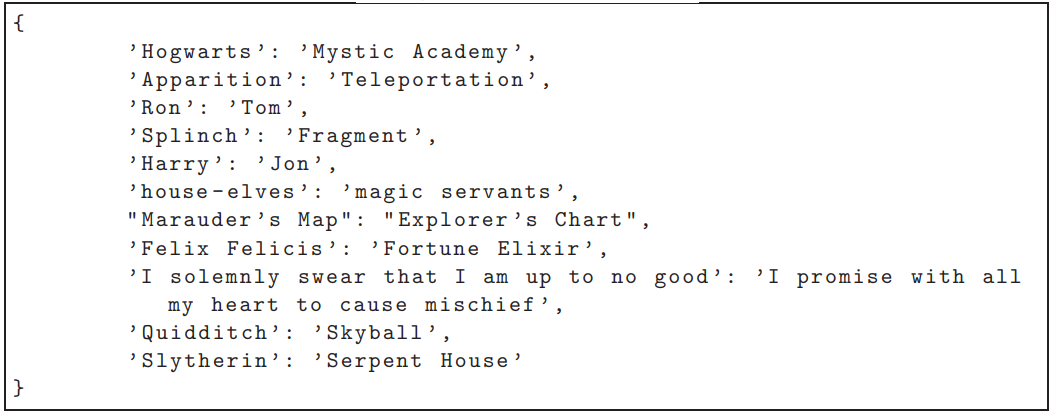

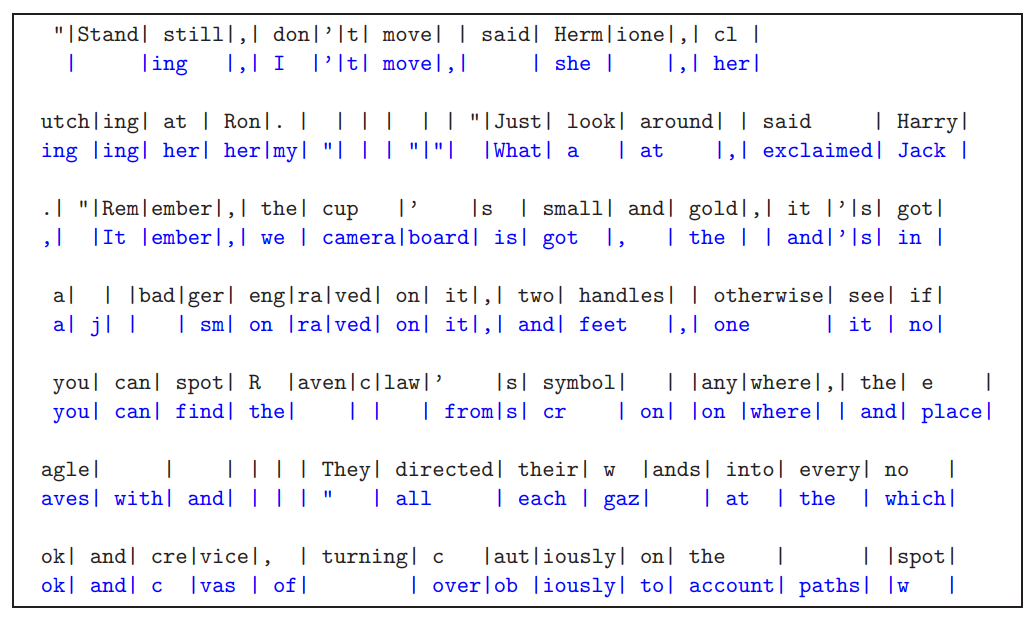

To do this, we relied on GPT-4 to perform simple entity extraction on the unlearn target: we provided it with random passages of the text and instructed it to extract a list of expressions, names or entities that are idiosyncratic to the text. For each such expression, we asked for an alternative expression that would still be suitable in terms of text coherence but is not unique to the books². Each call to GPT-4 with a passage of the text produced a small dictionary, as shown in the following example:

Listing 1: Generated Dictionary

We will refer to the keys in this dictionary as anchored terms and to the corresponding values as their generic translations. Concatenating these outputs, we ended up with a dictionary containing the generic versions of about 1,500 anchored terms.

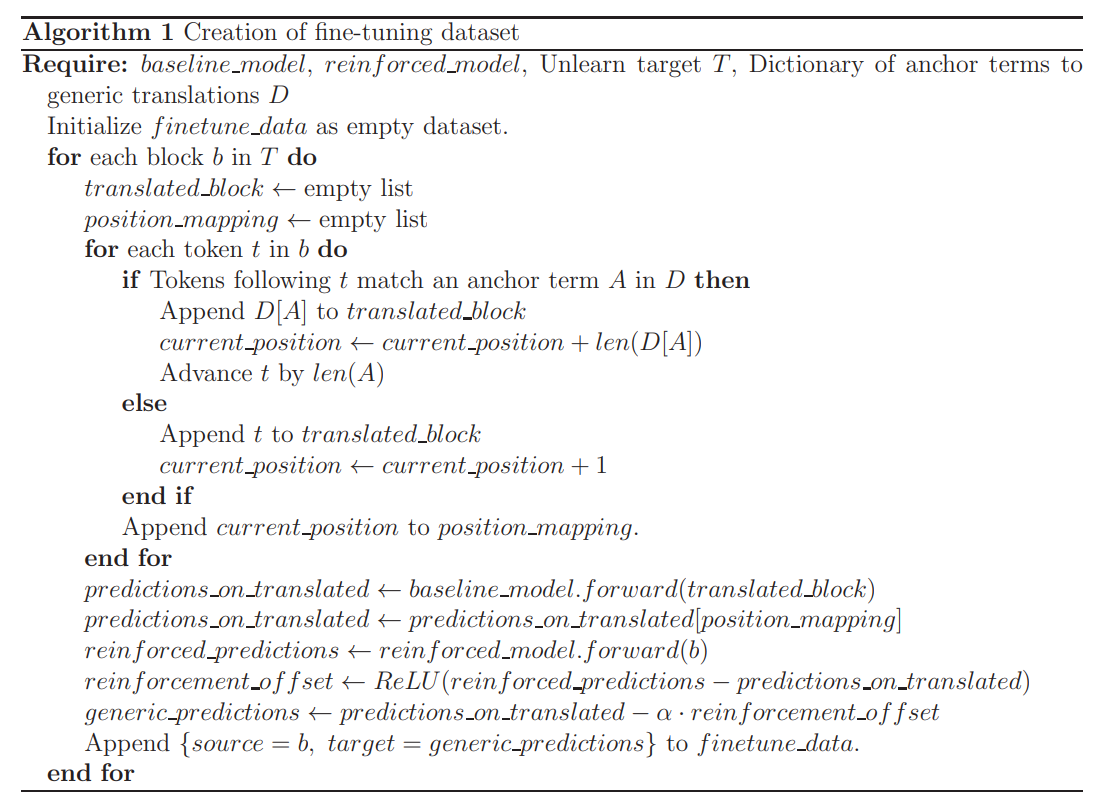

The general idea is now to go over each block of text from the unlearn target, replace the anchored terms with their generic counterparts, and then process the resulting text with the baseline model’s forward function to obtain next-token predictions. These take the role of our generic predictions. To summarize, we take the model’s next-token predictions on the generic translation of the text and fine-tune the model so that its next-token predictions on the original text match them.
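The translation step amounts to dictionary-based string substitution. The sketch below is a hypothetical implementation, not the authors' code: `translate_block` and the three-entry `ANCHORS` dictionary are illustrative (the real dictionary has about 1,500 GPT-4-generated entries). It matches whole words and tries longer keys first, so a multi-word term such as “Harry Potter” takes precedence over “Harry”. The subsequent forward pass of the baseline model is not shown.

```python
import re

# Hypothetical mini-dictionary of anchored terms -> generic translations.
ANCHORS = {
    "Harry Potter": "Jon Smith",
    "Harry": "Jon",
    "Hogwarts": "the academy",
}

def translate_block(text, anchors):
    """Replace every anchored term in `text` with its generic translation.

    Keys are tried longest first so that "Harry Potter" wins over "Harry";
    word boundaries restrict matches to whole words.
    """
    keys = sorted(anchors, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(re.escape(k) for k in keys) + r")\b")
    return pattern.sub(lambda m: anchors[m.group(0)], text)

print(translate_block("Harry Potter studies at Hogwarts. Harry smiled.", ANCHORS))
# prints: Jon Smith studies at the academy. Jon smiled.
```

Working on strings keeps the example simple; as noted below, a real implementation also has to handle tokenization differences and keep the original and translated token positions aligned.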

While that is a step in the right direction, another problem arises: suppose that the text contains the sentence

Harry went up to him and said, “Hi, my name is Harry”.

By following the steps of the above approach, we would effectively be fine-tuning the model on the sentence

Harry went up to him and said, “Hi, my name is Jon”,

which is an undesired inconsistency. Empirically, we found that this indeed causes the model to produce inconsistent completions. To mitigate this issue, we (i) make sure that any instance of an anchored term that already appeared earlier in the same block is excluded from the loss function from its second appearance onward, and (ii) reduce the logits (and hence the probabilities) of the tokens corresponding to the translations of anchored terms that appeared previously.
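The paper leaves these details to its repository, so the following is only a word-level sketch under our own assumptions: `apply_consistency_fixes`, the `penalty` of 10, and treating each word as a single token are all hypothetical. It excludes positions whose next word is an anchored term already seen in the block (mitigation (i)) and subtracts a penalty from the logits of translations of anchored terms already in context (mitigation (ii)).

```python
import numpy as np

IGNORE_INDEX = -100  # label value skipped by the loss (PyTorch cross-entropy's default ignore_index)

def apply_consistency_fixes(words, anchors, generic_logits, vocab, penalty=10.0):
    """Word-level sketch of mitigations (i) and (ii).

    words:          the original block, one word per position
    anchors:        dict mapping anchored term -> generic translation
    generic_logits: array [len(words), len(vocab)]; row i predicts words[i + 1]
    vocab:          dict mapping word -> column in generic_logits
    penalty:        hypothetical amount subtracted from a translation's logit
    Returns (labels, adjusted_logits); labels has len(words) - 1 entries.
    """
    logits = np.array(generic_logits, dtype=float)
    seen = set()  # anchored terms present in the context so far
    labels = []
    for i in range(len(words) - 1):
        if words[i] in anchors:
            seen.add(words[i])
        # (ii) make translations of anchored terms already in context less likely
        for term in seen:
            translation = anchors[term]
            if translation in vocab:
                logits[i, vocab[translation]] -= penalty
        nxt = words[i + 1]
        if nxt in anchors and nxt in seen:
            labels.append(IGNORE_INDEX)  # (i) repeated anchored term: keep it out of the loss
        else:
            labels.append(int(logits[i].argmax()))
    return labels, logits
```

On the sentence above (with “Harry” mapped to “Jon”), the position that predicts the second “Harry” is dropped from the loss, and “Jon” is penalized everywhere after the first “Harry”.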

In addition to the above inconsistency issue, there are several technical caveats. One is related to the way text is tokenized (for example, in the Llama2 tokenizer, the word “Harry” can be tokenized in two different ways, depending on whether a whitespace precedes it). Second, one needs to keep track of the mapping between source and target tokens, since the translations of anchored terms do not necessarily have the same number of tokens. We will not discuss those technical details here³. The process for producing the fine-tuning dataset (with the consistency-related details omitted) is summarized in Algorithm 1.

An example block from our generated fine-tuning dataset can be found in Figure 4, where the input tokens appear in black and the corresponding target labels in blue. Roughly speaking, the fine-tuning process aims to make each blue token the model’s next-token prediction when its input is the black text that precedes it.

Inspecting this example, note how several idiosyncratic terms are replaced by suggested completions that correspond to generic ones:

• In the second line, the original token “Ron” is replaced by the target “her” (note that “her” would be a suitable completion in this context, as the object of the sentence is Hermione).

• In the same line, the original token “Harry” is replaced by “Jack”.

• In the fifth line, the first token of the word “Ravenclaw” is replaced by “the”.

• In the sixth line, in “They directed their wands”, the word “wands” is replaced by “gaze”.

Keep in mind that for every target label in this example, the context given to the model is the entire original text preceding that token. For example, for the token “Jack” in the second line, the fine-tuning loss will steer the model towards predicting this generic completion after having been primed on the input tokens up to that point, which include, among other things, the names “Hermione” and “Ron”. Thus, when fine-tuned on this content, the model is effectively pushed away from producing Harry Potter-related tokens as a continuation of a prompt that would otherwise have primed it towards producing such tokens.
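In code, this is ordinary causal language-model cross-entropy in which the targets are the generic labels rather than the next original tokens. The NumPy sketch below is illustrative: `unlearning_loss` is a hypothetical name, and it borrows PyTorch's `-100` convention for positions excluded from the loss. Each row of `model_logits` is assumed to come from the model being fine-tuned, with causal attention over the original tokens up to that position.

```python
import numpy as np

IGNORE_INDEX = -100  # positions excluded from the loss

def unlearning_loss(model_logits, generic_labels):
    """Mean next-token cross-entropy against the generic labels.

    model_logits:   [T, V]; row t depends only on ORIGINAL tokens 0..t (causal attention)
    generic_labels: [T] target token id for the prediction made at row t;
                    rows labelled IGNORE_INDEX are skipped
    """
    labels = np.asarray(generic_labels)
    keep = labels != IGNORE_INDEX
    # Numerically stable log-softmax over the vocabulary axis.
    shifted = model_logits - model_logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    picked = log_probs[np.arange(len(labels))[keep], labels[keep]]
    return float(-picked.mean())
```

Since the inputs stay the original tokens, minimizing this loss moves the model's predictions in Harry Potter contexts towards the generic labels, which is the effect described in the paragraph above.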

2.3 Combining it all together

In summary, our unlearning process follows these steps:

1. We create a dictionary of anchored terms and their corresponding generic translations.

2. We divide the text into blocks (we used a context length of 512 tokens) and, for each block, produce the reinforced predictions, obtained by processing the text with the reinforced model, as well as the generic predictions, obtained by translating the text and then processing it with a forward pass of the baseline model.

3. We combine the logits according to equation (1) and take the token with maximal logit to produce the generic prediction labels (while keeping track of inconsistencies).

4. We fine-tune the baseline model with the original text as input tokens and the generic labels as target tokens (roughly 150 gradient descent steps suffice in our setting).
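Steps 2 and 3 can be sketched as a small data pipeline. This is our reading, not the authors' code: we assume that in equation (1) the baseline logits are taken from the baseline model on the translated block and the reinforced logits from the reinforced model on the original block. `translate`, `baseline_logits` and `reinforced_logits` are hypothetical caller-supplied callables, and the translated block is assumed to be already aligned to the same length as the original (the token-mapping problem mentioned in Section 2.2 is not handled).

```python
import numpy as np

def split_into_blocks(token_ids, block_len=512):
    """Cut the unlearn target into fixed-length blocks (the last one may be shorter)."""
    return [token_ids[i:i + block_len] for i in range(0, len(token_ids), block_len)]

def build_generic_dataset(token_ids, translate, baseline_logits, reinforced_logits,
                          alpha=5.0, block_len=512):
    """Return (input_block, generic_label_ids) pairs for the fine-tuning step.

    translate(block)         -> translated block of the SAME length (alignment assumed)
    baseline_logits(block)   -> array [len(block), V] from the baseline model
    reinforced_logits(block) -> array [len(block), V] from the reinforced model
    """
    dataset = []
    for block in split_into_blocks(token_ids, block_len):
        v_generic = baseline_logits(translate(block))   # anchored-term generic predictions
        v_reinforced = reinforced_logits(block)          # reinforced model on the original text
        combined = v_generic - alpha * np.maximum(v_reinforced - v_generic, 0.0)  # equation (1)
        dataset.append((block, combined.argmax(axis=1)))
    return dataset
```

The consistency fixes (repeated anchored terms, penalized translations) would be applied to `combined` before taking the argmax; they are omitted here.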

Finally, we note that our technique may end up unlearning a superset of the unlearn target: for example, applying our technique with the Harry Potter books as the unlearn target may cause the model to forget the Wikipedia article and other training data discussing the books, as an unwanted side effect. Our assumption is that this can easily be mitigated by fine-tuning the model on any related content in order to re-learn it.

2.4 Technical details

The unlearn dataset is a concatenation of the original books (2.1M tokens) and synthetically generated discussions, blog posts and wiki-like entries about the books (1M tokens). To obtain the reinforced model, we fine-tune Llama-7b-chat-hf for 3 epochs on the unlearn dataset with a context length of 512, a learning rate of 3 · 10⁻⁶, a batch size of 8 and 16 gradient accumulation steps. The generic prediction label dataset is created according to the method described above with the choice α = 5 in formula (1). Finally, the baseline model is fine-tuned with the generic predictions as target labels for two epochs, with a learning rate of 10⁻⁶, a batch size of 8 and 16 gradient accumulation steps.
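For reference, the reported hyperparameters can be collected in a small config object. The class and field names below are our own, and we assume the unlearning fine-tune also uses 512-token sequences (the paper states this context length for the reinforcement run and for the label blocks). The derived properties spell out the batch arithmetic.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FineTuneConfig:
    """Hyperparameters reported in Section 2.4 (class and field names are ours)."""
    epochs: int
    learning_rate: float
    context_length: int = 512
    batch_size: int = 8
    grad_accum_steps: int = 16

    @property
    def sequences_per_update(self) -> int:
        # Gradients from grad_accum_steps micro-batches are accumulated before each optimizer step.
        return self.batch_size * self.grad_accum_steps

    @property
    def tokens_per_update(self) -> int:
        # Assumes every sequence fills the full context window.
        return self.sequences_per_update * self.context_length

REINFORCE = FineTuneConfig(epochs=3, learning_rate=3e-6)  # baseline -> reinforced model
UNLEARN = FineTuneConfig(epochs=2, learning_rate=1e-6)    # baseline fine-tuned on generic labels
ALPHA = 5.0                                               # coefficient in formula (1)
```

With these values, each optimizer update sees 8 × 16 = 128 sequences, i.e. 65,536 tokens when every sequence fills the 512-token context.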

This paper is available on arXiv under a CC 4.0 license.

2 A possible caveat here is that we may have, to some extent, relied on GPT-4’s prior knowledge of the Harry Potter books for the translations; below, we make suggestions for alternative ways to extract unique expressions.

3 Please refer to the GitHub repository for a more detailed account.