Author:

(1) Mohammad AL-Smad, Qatar University, Qatar (e-mail: malsmadi@qu.edu.qa).


4. Literature Review

This section discusses related research on using generative AI models and chatbots in education, with a focus on ChatGPT. It also sheds light on the potential advantages and challenges of using these technologies in education.

4.1. Chatbots in Education

The increasing popularity of artificial intelligence (AI) chatbots in education has prompted numerous empirical studies of their impact on students’ learning outcomes. However, the findings of these studies have been inconsistent, calling for a comprehensive review and synthesis. Wu & Yu (2023) addressed this gap by conducting a meta-analysis of 24 randomized studies in Stata (version 14). Its aims were to analyze the impact of AI chatbots on students’ learning outcomes and to evaluate the moderating effects of educational level and intervention duration. The meta-analysis revealed that AI chatbots have a substantial effect on students’ learning outcomes. This effect was more pronounced among higher education students than among primary and secondary school students. Moreover, shorter chatbot interventions had a stronger effect on learning outcomes than longer ones. This suggests that the novelty of AI chatbots may boost learning outcomes in the short term, but that the effect diminishes over longer interventions. To further enhance students’ learning outcomes, designers and educators are encouraged to incorporate human-like avatars, gamification elements, and emotional intelligence into AI chatbot interventions, since these features can potentially increase engagement and motivation.

Gibson et al. (2023) presented a three-level model that synthesizes multiple learning theories to describe the functions of artificial intelligence (AI) in facilitating learning. The model explains how learning takes place at the micro, meso, and macro levels, and it offers insights into the diverse roles AI can play in education. Corresponding to the features of the model, the authors propose fourteen distinct roles for AI that differ by level: “four roles at the individual or micro level, four roles at the meso level of teams and knowledge communities, and six roles at the macro level of cultural historical activity”. By aligning AI functionalities with the model’s structure, AI developers can collaborate with learning designers, researchers, and practitioners to improve individual learning, team performance, and the cultivation of knowledge communities.

Guo et al. (2023) proposed chatbot-assisted in-class debates (CaIcD), a task design that integrates argumentative chatbots into classroom debates to enhance students’ argumentation skills and task motivation. In CaIcD, students interact with an argumentative chatbot called Argumate before debating with their peers. The chatbot helps them explore ideas that support their position and prepare for opposing viewpoints. A study with 44 Chinese undergraduate students found that CaIcD led to significant improvements in argumentation skills. Students generated arguments with more claims, data, and warrants, and their arguments were more organized, sufficient, and elaborated. Students also reported enjoying CaIcD more, and exerting more effort, than in conventional learning tasks. Despite facing more challenges, students performed as successfully in CaIcD as in conventional debates with their classmates.

Along similar lines, Iku-Silan et al. (2023) investigated the effects of using “a decision-guided chatbot on interdisciplinary learning of learners with different cognitive styles, learning motivation, collective efficacy, classroom engagement, satisfaction with the learning approach, and cognitive load”. The study involved 71 junior high school students in northern Taiwan, divided into an experimental group (n=35) and a control group (n=36). The experimental group learned with the decision-guided chatbot, while the control group used conventional technology-enhanced learning methods. The experimental group significantly outperformed the control group in “learning achievements, collective efficacy, extrinsic motivation, cognitive engagement, emotional engagement, and satisfaction with the learning approach”. It also reported lower mental effort during learning. Regarding cognitive styles, analytical learners achieved significantly higher learning achievements than intuitive learners, regardless of the learning method used. In addition, analytical learners in the experimental group experienced significantly lower mental load than intuitive learners.

4.2. ChatGPT in Education

ChatGPT, a generative language model developed by OpenAI, has gained significant popularity since its launch in November 2022, attracting over 100 million users within a few months. The rapid advancement of generative AI-based tools is significantly affecting higher education institutions (HEIs). Yet their influence on existing practices for the continuous improvement of learning has been underexplored, especially in undergraduate degree programs. Given how widely students use ChatGPT, academic program designers need to understand how AI-based tools affect the assessment of students’ attainment of learning outcomes. Such assessment is commonly used to enhance program quality, teaching effectiveness, and overall learning support. To address this gap, this section reviews related work on using ChatGPT, as a generative AI model, in several educational applications.

Chaudhry et al. (2023) conducted an empirical study to evaluate ChatGPT’s ability to solve a variety of assignments from courses at different levels of undergraduate degree programs. ChatGPT’s performance was compared with that of the highest-scoring student(s) on each assignment, and the results were examined to understand their impact on students’ learning outcomes. Furthermore, the ChatGPT-generated assignment solutions were checked with widely used detection tools such as Turnitin, GPTZero, and Copyleaks to determine their compliance with academic integrity standards. The findings emphasize the need for HEIs to adapt their practices to safeguard academic integrity and monitor the quality of student learning. They have important implications for HEI managers, regulators, and educators as they navigate the integration of AI tools into education.

While academics have already used ChatGPT for tasks such as drafting papers or conducting systematic reviews, its role in supporting learning has not been thoroughly examined. To address this gap, Stojanov (2023) reflected on their personal experience of using ChatGPT as a “more knowledgeable other” to scaffold their learning about how ChatGPT works technically. The findings indicate that ChatGPT provided sufficient content to develop a general understanding of these technical aspects, and its feedback was perceived as motivating and relevant. However, the interactions also revealed limitations. ChatGPT’s answers were occasionally superficial, the generated text was not always consistent, logical, or coherent, and in some instances its responses were contradictory. Its immediate responses contributed to a sense of flow and engagement during learning. However, this heightened engagement also led to an overestimation of knowledge and understanding, as the author did not immediately recognize contradictory information. Based on these observations, caution is advised when using ChatGPT as a learning aid. It is essential to consider both the capabilities and limitations of the technology and how humans perceive and interact with such AI systems.

Cooper (2023) explored the potential of ChatGPT to transform science education by investigating three main areas: (1) ChatGPT’s ability to answer science education questions, (2) ways for educators to use ChatGPT in their science pedagogy, and (3) how ChatGPT was used as a research tool in the study itself. The study found that ChatGPT generated comprehensive and informative responses to a wide range of science education questions, often aligning with key themes in the research. It also suggests several uses for educators: generating personalized learning materials, creating interactive learning activities, providing students with feedback and support, and designing science units, rubrics, and quizzes. In addition, ChatGPT was used to edit and improve the clarity of the research narrative. It proved valuable for this purpose, although the study emphasizes the importance of critically evaluating AI-generated content and adapting it to the specific context. The study also highlights ethical considerations associated with using AI in education, such as the risk of ChatGPT positioning itself as the ultimate authority and the potential for copyright infringement. Cooper argues that educators should adopt a responsible approach to using ChatGPT, prioritize critical thinking by focusing on metacognitive skills in their learning activities, and clearly communicate expectations to their students.

Su & Yang (2023) investigated the potential benefits and challenges of using ChatGPT and generative AI in education. They introduced a theoretical framework, “IDEE”, to guide the implementation of educative AI in educational settings. The framework comprises four key components: “identifying desired outcomes, setting the appropriate level of automation, ensuring ethical considerations, and evaluating effectiveness”. By examining opportunities and challenges through this framework, the study contributes to ongoing research on the integration of AI technologies in education.

Tlili et al. (2023) conducted a three-stage study. In the first stage, the researchers investigated community sentiment about using ChatGPT on different social media platforms. The overall sentiment was positive, with enthusiasm for ChatGPT’s potential use in educational settings, although there were also voices of caution about its implementation in education. In the second stage, they analyzed the case of using ChatGPT in education from multiple perspectives, including “educational transformation, response quality, usefulness, personality and emotion, and ethics”. This analysis yielded insights into the dimensions and considerations involved in integrating ChatGPT into educational contexts. In the final stage, ten educational scenarios were implemented to investigate user experiences with ChatGPT. Several issues were identified, including cheating, the honesty and truthfulness of ChatGPT’s responses, privacy implications, and the potential for manipulation. Overall, the study sheds light on the use of ChatGPT in education from the perspectives of public discourse, implementation dimensions, and user experience. It emphasizes the importance of considering ethical implications and ensuring the responsible adoption of chatbots in education.

Shoufan (2023) conducted a two-stage study to investigate how senior students in a computer engineering program perceive and evaluate ChatGPT’s impact on teaching and learning. The study included 56 participants. In the first stage, students wrote their own evaluations of ChatGPT after completing a learning activity with the tool. The researchers analyzed these responses (3,136 words) through coding and theme building, resulting in 36 codes and 15 themes. In the second stage, three weeks later, students completed a 27-item questionnaire after engaging in further activities with the assistance of ChatGPT. The findings indicated that students found ChatGPT’s capabilities interesting and considered it easy to use, motivating, and supportive for studying. They appreciated its well-structured answers and explanations. However, students also expressed concerns about the accuracy of ChatGPT’s responses and noted that background knowledge is needed to evaluate them. Students’ opinions were divided on ChatGPT’s potential negative impact on learning performance, on academic integrity and dishonesty, and on job displacement. The study concludes that ChatGPT can and should be used to augment teaching and learning. It adds that both teachers and students must build competencies in using it properly, and that developers should improve the accuracy of its answers.

Strzelecki (2023) developed a model to examine the main predictors of the adoption and use of ChatGPT in higher education. A total of 534 students at a Polish state university participated, and their self-reported data were used to test ten research hypotheses, nine of which were confirmed. The findings revealed that “habit” was the most reliable predictor of “behavioral intention”, followed by “performance expectancy” and “hedonic motivation”. For actual use, “behavioral intention” was the most significant factor, followed by “personal innovativeness”. The study also highlights the need for continued research on how AI tools can be effectively integrated into educational settings.

Technology-enhanced language learning, particularly with generative AI models, has positively engaged second language (L2) learners and enhanced their performance. With the introduction of ChatGPT, educators have used its automatic text generation capabilities in writing classrooms. For instance, Yan (2023) explored the application of ChatGPT’s text generation capabilities in a one-week L2 writing activity and examined students’ behaviors and reflections while using the tool. The findings demonstrated the potential applicability of ChatGPT in L2 writing pedagogy, highlighting its affordances as a tool for composing text efficiently. However, participants also expressed concerns about the tool’s implications for academic honesty and educational equity. In particular, they worried about the potential for plagiarism and the need to redefine plagiarism in the context of generative AI. This prompted a call for regulatory policies and pedagogical guidance to ensure the proper use of ChatGPT in educational settings.

Gunther Eysenbach, founder and publisher of JMIR Publications, conducted an interview with ChatGPT to explore the potential of chatbots in medical education (Eysenbach et al., 2023). The interview offers a glimpse into ChatGPT’s current capabilities and challenges. It showcases the model’s potential to support medical education while emphasizing the need for careful editing, critical evaluation, and human oversight when using AI in the medical field. Although the model occasionally makes mistakes, it acknowledges and corrects them when challenged. However, it also tends to generate fictional references, illustrating the known tendency of large language models to produce inaccurate or fabricated information. As a result, JMIR Medical Education launched a call for papers on AI-supported medical education; the initial draft of the call was generated by ChatGPT and subsequently edited by human guest editors.

McGee (2023) used ChatGPT to search for citations related to a specific book. Although ChatGPT did not provide the exact list of citations requested, it offered useful guidance on how to compile such a list. This suggests that, even when ChatGPT does not directly fulfill a request, it can still offer valuable assistance for future research endeavors.

In a study involving 406 Malaysian students, Foroughi et al. (2023) sought to identify possible determinants of the intention to use ChatGPT in education. The study builds on the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2). The collected data were analyzed using a hybrid approach combining “partial least squares” (PLS) and “fuzzy-set qualitative comparative analysis” (fsQCA). According to the PLS analysis, performance expectancy, effort expectancy, hedonic motivation, and learning value were significant determinants of the intention to use ChatGPT. In other words, students are more likely to use ChatGPT if they believe it will help them perform better, learn more easily, and have a more enjoyable learning experience. Interestingly, the PLS analysis showed that social influence, facilitating conditions, and habit did not have a significant effect on ChatGPT use. However, the fsQCA analysis suggests that these factors may indeed affect the intention to use ChatGPT. This indicates that different combinations of factors can lead to high ChatGPT use.

Smith et al. (2023) explored the potential of ChatGPT to support teaching methods in the field of social psychiatry. The researchers interacted with ChatGPT 3.5 to perform one of the tasks related to their course. Based on this experience, they found that ChatGPT can effectively support active and case-based learning activities for both students and instructors in social psychiatry. However, they acknowledge the limitations of chatbots, such as the potential for misinformation and bias, and suggest that these limitations may be temporary as the technology continues to advance. The researchers argue that, with appropriate caution, generative AI models can support social psychiatry education, and they encourage further research to better understand their potential and limitations.

Choi et al. (2023) assessed the ability of a generative AI model (ChatGPT) to answer law school exams without human assistance. They used ChatGPT to generate answers for four real exams at the University of Minnesota Law School, comprising over 95 multiple-choice questions and 12 essay questions. The researchers then graded the exams blindly as part of their regular grading processes. On average, ChatGPT performed at the level of a C+ student across all four courses. Although this performance was relatively low, ChatGPT achieved a passing grade in all four exams. The study reports these results in detail and discusses their implications for legal education. It also offers guidelines on how ChatGPT can assist with legal writing, including example prompts and advice.

Mohammed et al. (2023) reported the development, validation, and use of a Knowledge, Attitude, and Practice tool (KAP-C) concerning ChatGPT in pharmacy practice and education. More precisely, the tool targets how pharmacists and pharmacy students perceive and use ChatGPT in their professional practice and educational settings. The authors reviewed the literature on using ChatGPT to support knowledge, attitude, and practice in several educational settings, focusing on health-related studies. They used the results of this review to develop a survey targeting “pharmacists and pharmacy students in selected low- and middle-income countries (LMICs) including Nigeria, Pakistan, and Yemen”. Findings from this survey will provide insights into the psychometric properties of the KAP-C tool. They will also assess the knowledge, attitude, and practice of pharmacy professionals and students in LMICs regarding the use of ChatGPT.

Fergus et al. (2023) investigated the potential impact of ChatGPT on learning and assessment. The study focuses on two chemistry modules in a pharmaceutical science program and examines ChatGPT-generated responses to end-of-year exam questions. It finds that ChatGPT can generate responses to questions that require knowledge and understanding, indicated by verbs such as “describe” and “discuss”. However, its performance was limited on questions that involve applying knowledge and interpreting non-text information. Importantly, the findings suggest that ChatGPT is not a high-risk tool for cheating. Nevertheless, the study emphasizes that the use of ChatGPT in education, much like the disruptions caused by the COVID-19 pandemic, stimulates discussion of assessment design and academic integrity.

The editorial by Lodge et al. (2023) highlights the profound impact of generative artificial intelligence (AI) on tertiary education. It identifies key areas that require rethinking and further research in the short to medium term. The rapid advancement of large language models and associated applications has created a transformative environment that demands attention and exploration. The editorial acknowledges that, although there has been prior research on AI in education, the current landscape is unprecedented, and the AI in education community may not have fully anticipated these developments. It also outlines the position of AJET (Australasian Journal of Educational Technology) on generative AI, particularly regarding authors who use tools like ChatGPT in their research or writing processes. Acknowledging the evolving nature of the field, the editorial aims to provide some clarity, while recognizing that this information will need to be revisited and updated in the coming weeks and months.

4.3. Advantages and Challenges for Using Generative AI Models in Education

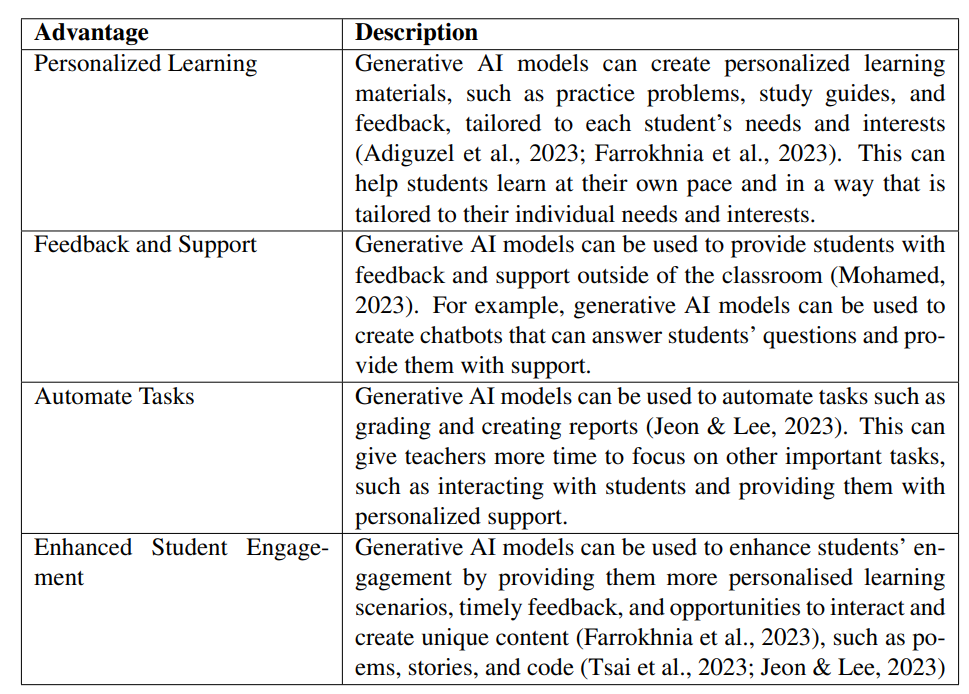

Generative AI models have the potential to revolutionize education by personalizing learning, providing feedback and support, and automating tasks. However, several challenges need to be addressed before they can be widely adopted in the classroom. The use of generative AI models in academia has become a prominent and debated topic in the education field. ChatGPT, for instance, offers numerous advantages, such as enhanced student learning and engagement, collaboration, and accessibility of learning. However, its use also raises concerns about academic integrity and plagiarism (Susarla et al., 2023). This section reviews related work that addresses these challenges and paves the way toward the proper adoption of generative AI models in education.

Cotton et al. (2023) explored the opportunities and challenges of using ChatGPT in higher education. One significant challenge is detecting and preventing academic dishonesty facilitated by generative AI models, since plagiarism is difficult to identify when AI-generated content is involved. To address these concerns, the paper proposes strategies that HEIs can adopt to ensure the responsible and ethical use of generative AI models like ChatGPT. These strategies include developing policies and procedures that explicitly set out expectations and guidelines for using AI tools. The study also emphasizes providing training and support to both students and faculty members as a crucial step toward promoting understanding and responsible use of these technologies. Employing various methods to detect and prevent cheating, such as plagiarism detection software and proctoring technologies, is also recommended. The authors argue that, by implementing strategies that prioritize academic integrity, universities can harness the benefits of generative AI models while mitigating potential risks.

Chan (2023) investigated the perceptions and implications of text-generative AI technologies in higher education institutions in Hong Kong. The study involved 457 students and 180 teachers and staff from various disciplines. Based on its findings, the study proposes an “AI Ecological Education Policy Framework” to address the multifaceted implications of integrating AI technologies into university education. The framework is organized into three dimensions: “Pedagogical, Governance, and Operational”. The Pedagogical dimension emphasizes using AI to enhance teaching and learning outcomes. It explores how AI can improve instructional practices, personalize learning experiences, and facilitate student engagement. The Governance dimension addresses privacy, security, and accountability in AI integration. It emphasizes policies, guidelines, and safeguards that protect data privacy, ensure ethical AI practices, and promote responsible use of AI technologies. The Operational dimension deals with practical considerations such as infrastructure and training. It highlights the importance of providing the technological infrastructure and support needed for successful AI implementation in higher education. It also calls for training programs that equip educators and staff with the competencies required to use AI technologies effectively.

Kasneci et al. (2023) argue that a responsible approach to adopting large language models (LLMs) in education must be preceded by equipping teachers and learners with specific competencies and training. These should cover how to master generative AI tools and how to understand their limitations and potential vulnerabilities. The authors emphasize the need for clear strategies and pedagogical approaches that prioritize critical thinking and fact-checking skills in order to fully integrate LLMs into learning settings and teaching curricula. The commentary provides recommendations for addressing these challenges and ensuring the responsible and ethical use of LLMs in education. The authors argue that, by addressing these concerns and adopting a sensible approach, LLMs can be used effectively to enhance educational experiences while educating students about the potential biases and risks of AI applications.

Adiguzel et al. (2023) discussed the advantages and challenges of using generative AI models in education, aiming to provide insights for incorporating these technologies responsibly and ethically in educational settings. The main advantages they highlight are improved learning outcomes, increased productivity, and enhanced student engagement through personalized education, feedback, and assistance. However, the authors acknowledge the ethical and practical challenges of implementing AI in education. Key concerns include potential bias in AI algorithms and the need for adequate preparation and support for teachers and students. The authors also emphasize potential applications of generative AI models, specifically chatbots and ChatGPT, in areas such as personalized learning, language instruction, and feedback provision.

Mohamed (2023) conducted a study to explore perceptions of using ChatGPT to support students’ English language learning. Ten English as a Foreign Language (EFL) faculty members at Northern Border University participated, and in-depth interviews were the primary method of data collection. The interviews revealed a range of opinions among the faculty members about ChatGPT’s efficacy. Some participants acknowledged its usefulness in answering a wide array of questions quickly and accurately, and they recognized its potential to complement and enhance traditional EFL teaching methods. Others expressed concerns that using ChatGPT may hinder students’ development of critical thinking and research skills. They also highlighted its potential to reinforce biases or propagate misinformation. Overall, the faculty members viewed ChatGPT as a valuable tool for supporting EFL teaching and learning, while recognizing the need for further research to assess its effectiveness.

Tsai et al. (2023) conducted a study focusing on the advantages of incorporating generative AI models into chemical engineering education. The researchers proposed an approach that uses ChatGPT as a learning aid for solving chemical engineering problems. The study aimed to address limitations in chemical engineering education and to deepen students’ understanding of core subjects by focusing on practical problem-solving rather than theoretical knowledge. In an experimental lecture, the researchers presented a simple example of using ChatGPT to “calculate steam turbine cycle efficiency”. They also assigned projects in which students explored potential applications of ChatGPT for solving chemical engineering problems. Student feedback was mixed, but overall ChatGPT was reported to be an accessible and practical tool for improving problem-solving abilities. The researchers also highlighted the problem of hallucination, in which generative AI models produce realistic but inaccurate content that can mislead students and negatively affect the learning process.

Wardat et al. (2023) reported their experience of using ChatGPT to teach mathematics, based on a two-stage study that evaluated both the content generated by ChatGPT and users’ perceptions. The authors found that ChatGPT’s ability to understand geometry problems was limited. Its performance also varied with the complexity of the equations, the input data, and the instructions given to it. However, they anticipate that ChatGPT will become more capable of solving geometry problems in the future. Regarding user perceptions, public discourse on social media reflects growing interest in using ChatGPT to teach mathematics, alongside voices of caution about its use in education. The authors recommend further research to ensure the safe and responsible use of chatbots, particularly ChatGPT, in mathematics education and learning.

Despite the rapid adoption of generative AI models, and ChatGPT in particular, across many educational scenarios and applications, there is still limited research on how educators should use this technology to augment their instruction. Jeon & Lee (2023) investigated how teachers and ChatGPT, a large language model chatbot, can work together to improve education. Eleven language teachers used ChatGPT in their classes for two weeks. They then shared their experiences in interviews and provided logs of their interactions with ChatGPT. The analysis showed that ChatGPT could play four roles in education: “interlocutor (having conversations with students), content provider (providing information), teaching assistant (helping students with their work), and evaluator (assessing student learning)”. These roles illustrate how ChatGPT could be used in different parts of the teaching process. The data also showed that teachers played three roles: “(a) orchestrating different resources with good pedagogical decisions, (b) making students active learners, and (c) raising awareness of AI ethics”. These roles highlight the importance of teachers’ pedagogical expertise in using AI tools effectively. The findings indicate that teachers and AI can work together effectively, provided that teachers apply their teaching expertise when incorporating AI tools into their instruction. The study also offers insights into how large language model chatbots could be used in education in the future.

Choudhury & Shamszare (2023) investigated the impact of users' trust in ChatGPT on their intention to use the technology and on their actual usage of it. The study examined four hypotheses about the relationships among trust, intention to use, and actual usage of ChatGPT. The authors conducted a web-based survey of adults in the United States who use ChatGPT (version 3.5) at least once a month. The survey responses were used to construct two latent variables, Trust and Intention to Use, with Actual Usage as the dependent variable. A total of 607 participants completed the survey. Their primary ChatGPT use cases were information acquisition, entertainment, and problem-solving. Structural equation modeling revealed that trust has a substantial direct influence on both the intention to use ChatGPT and its actual usage. The model explained 50.5% of the variation in intention to use and 9.8% of the variation in actual usage. Bootstrapping supported the rejection of all four null hypotheses, confirming the significant impact of trust on both intention and actual usage. The authors also found that trust affects actual usage indirectly, with intention to use acting as a partial mediator. These findings underscore the pivotal role of trust in the adoption of ChatGPT. Nevertheless, the authors caution that ChatGPT was not explicitly designed for healthcare applications. Overreliance on it for health-related advice can therefore lead to misinformation and potential health hazards. The study stresses the need to improve ChatGPT's ability to distinguish between queries it can answer properly and those that should be handled by human experts.
Moreover, the study recommends shared responsibility and collaboration among developers, subject matter experts, and human factors researchers to mitigate the risks of excessive trust in generative AI models such as ChatGPT.

Farrokhnia et al. (2023) conducted a SWOT analysis of ChatGPT to examine its strengths, weaknesses, opportunities, and threats in the context of education. The analysis identified three strengths: ChatGPT's ability to generate plausible answers using a sophisticated natural language model, its capacity for self-improvement, and its potential to provide personalized, real-time responses. These strengths suggest that ChatGPT can enhance access to information and facilitate personalized and complex learning experiences. They also suggest that it can reduce educators' workload, thereby making educational processes more efficient. However, the analysis also highlights several weaknesses. These include ChatGPT's lack of deep understanding, its inability to evaluate the quality of its own responses, its potential for bias and discrimination, and its limited ability to foster higher-order thinking skills. These weaknesses pose challenges to its effective use in educational contexts. The study further discusses the opportunities that ChatGPT presents for education, including enhanced student engagement, individualized instruction, and support for diverse learners. It also examines the threats that ChatGPT poses to education. These include misunderstanding context, compromising academic integrity, perpetuating discrimination in educational practices, enabling plagiarism, and potentially diminishing higher-order cognitive skills.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.